I’ve been an Apple “fanboy” for almost 20 years now, ever since I got my first MacBook Pro back in 2005. I’ve been developing for iOS for the past 15 of those years, and every June, I get excited for WWDC like it’s Christmas morning. 🤓 This year? Honestly, it felt a little underwhelming overall. But one thing really stood out, and it’s the reason I’m writing this first blog post: Apple’s new Foundation Models framework for iOS 26.

It’s the first time Apple has opened its on-device large language model (LLM) to developers. It’s private. It’s secure. It lives right on your device. And it’s free to use! That’s pretty cool: you get an LLM you can chat with or prompt, right on the device, at no cost.

Recently, I’ve become fascinated (and, honestly, a bit addicted) with all things AI, and I’ve been trying to find new ways to integrate LLMs into my iOS development. So I couldn’t wait to get my hands on this local model and play with it in an iOS app.

First Impressions: Playing with Apple’s Foundation Model

I downloaded Xcode 26 and the iOS 26 + macOS Tahoe betas (you’ll need the macOS beta to actually build with the model). I built a quick little sample app, a daily focus time creator, that I’ll probably write about in a separate post. Getting my hands on the framework gave me a real feel for its strengths and, more importantly, its limitations.

What is this model?

The model itself is a 3-billion-parameter, 2-bit quantized model. It has a context window of just 4,096 tokens, and that window is shared by your input and the model’s output for the entire session. That’s… not a lot. This isn’t a ChatGPT-style model. The use cases Apple keeps highlighting in its docs, labs, and WWDC sessions are very specific: text summarization, content tagging, game chat dialogue, and other kinds of lightweight natural language processing.
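To get a feel for how fast 4,096 tokens disappear, here’s some back-of-the-envelope arithmetic in plain Swift (no framework needed). It assumes roughly 4 characters per token for English text, which is only a rule of thumb because the real count depends on the model’s tokenizer. `estimatedTokens(for:)` and `remainingBudget(afterSessionTexts:)` are hypothetical helpers, not part of the Foundation Models API.

```swift
// Back-of-the-envelope context budget. Hypothetical helpers, not framework APIs.
// Assumption: ~4 characters per token for English text; the real number
// depends on the model's tokenizer.

let contextWindow = 4_096  // shared by instructions, prompts, tool output, and responses

func estimatedTokens(for text: String, charactersPerToken: Double = 4.0) -> Int {
    Int((Double(text.count) / charactersPerToken).rounded(.up))
}

/// Estimated tokens still free after everything already in the session (never below 0).
func remainingBudget(afterSessionTexts texts: [String]) -> Int {
    let used = texts.reduce(0) { $0 + estimatedTokens(for: $1) }
    return max(0, contextWindow - used)
}

// Stand-ins with realistic lengths: short instructions and a pasted article.
let instructions = String(repeating: "x", count: 400)  // ~100 tokens
let article = String(repeating: "y", count: 12_000)    // ~3,000 tokens

let left = remainingBudget(afterSessionTexts: [instructions, article])
print("Roughly \(left) tokens left for the response and any follow-up turns")
// prints "Roughly 996 tokens left for the response and any follow-up turns"
```

With a ~12,000-character article in the prompt, about three quarters of the window is gone before the model writes a single word.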

Model Limitations

The limitations are what you’d expect from a model of this size. It has very little world knowledge, it supports only text input and output, and the small context window rules out analyzing large data sets. And if you want to continue a conversation after a session hits its token limit, you have to manage the session transcript yourself.
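Here’s a minimal sketch of what that transcript management can look like, similar to the approach Apple describes in its WWDC25 guidance. You catch the context-window error, then continue in a new session seeded with a condensed transcript that keeps only the first entry (usually the instructions) and the most recent one. It assumes the release iOS 26 SDK, where `Transcript` behaves as a collection of entries; the Transcript API changed during the betas. `condensedSession(from:)` and `ask(_:in:)` are hypothetical helpers of my own.

```swift
import FoundationModels

/// Hypothetical helper: build a fresh session that keeps only the first
/// transcript entry (typically the instructions) and the most recent one.
func condensedSession(from previous: LanguageModelSession) -> LanguageModelSession {
    let entries = Array(previous.transcript)
    var kept: [Transcript.Entry] = []
    if let first = entries.first {
        kept.append(first)
    }
    if entries.count > 1, let last = entries.last {
        kept.append(last)
    }
    // Note: tools are not carried over; pass them again if your session uses any.
    return LanguageModelSession(transcript: Transcript(entries: kept))
}

/// Hypothetical helper: respond, and if the 4,096-token window is full,
/// retry once in a condensed session. Returns the session to keep using.
func ask(_ prompt: String, in session: LanguageModelSession) async throws -> (String, LanguageModelSession) {
    do {
        let response = try await session.respond(to: prompt)
        return (response.content, session)
    } catch LanguageModelSession.GenerationError.exceededContextWindowSize {
        let fresh = condensedSession(from: session)
        let response = try await fresh.respond(to: prompt)
        return (response.content, fresh)
    }
}
```

Dropping the middle of the conversation is the crude part. A smarter variant could ask the model to summarize the old transcript before starting the new session.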

Strengths of Apple’s Foundation Model

Strength #1: Truly Structured Data with @Generable

This is where things get interesting. One of the biggest challenges with small, on-device models is getting reliable, structured output. Anyone who has tried to get a tiny model to consistently output valid JSON knows the pain: smaller models frequently return malformed output, so you end up repairing the data or simply retrying the request.

Apple’s framework solves this beautifully with a new Swift macro: @Generable. You define a Swift struct and ask the Foundation Models API to populate it with generated content. The framework handles the hard part, constraining the model’s output so the data matches your type exactly. You can help the model understand each property’s purpose by adding a description with the @Guide macro, which can also constrain values, such as an array’s count or a numeric range. The set of supported property types is limited, but for what it does, it’s incredibly powerful. This is a huge win and shows a bit of Apple’s ingenuity.

```swift
import FoundationModels

@Generable
struct BookCatalog {
    @Guide(description: "A list of books", .count(3...5))
    var books: [Book]

    @Generable
    struct Book {
        var id: GenerationID

        @Guide(description: "The title of the book")
        var title: String

        @Guide(description: "The full name of the author")
        var author: String

        @Guide(description: "The genre or category of the book")
        var genre: String

        @Guide(description: "The year the book was published", .range(1900...2025))
        var publicationYear: Int
    }
}
```

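The framework supports generating properties with basic Swift types like String, Bool, Int, Float, Double, Decimal, and Array. It also supports other @Generable types, including enums and nested structs like the Book type above.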
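To populate a @Generable type, you pass it to `respond(to:generating:)`. The response’s `content` comes back as a typed Swift value rather than JSON you have to parse. Here’s a minimal sketch, assuming the iOS 26 SDK. `BookSuggestion`, `BookList`, and `suggestBooks()` are hypothetical names, trimmed down from the catalog above.

```swift
import FoundationModels

// Hypothetical types for illustration, smaller than the BookCatalog above.
@Generable
struct BookSuggestion {
    @Guide(description: "The title of the book")
    var title: String

    @Guide(description: "The full name of the author")
    var author: String

    @Guide(description: "The year the book was published", .range(1900...2025))
    var publicationYear: Int
}

@Generable
struct BookList {
    @Guide(description: "Recommended books", .count(3))
    var books: [BookSuggestion]
}

func suggestBooks() async throws -> [BookSuggestion] {
    let session = LanguageModelSession(
        instructions: "You recommend classic science fiction novels."
    )
    // `generating:` constrains the output to BookList, so `response.content`
    // is already a typed Swift value, not raw text to parse.
    let response = try await session.respond(
        to: "Suggest three books for a long flight.",
        generating: BookList.self
    )
    return response.content.books
}
```

The output is guaranteed to match the type, but the model still decides the values. With no world knowledge to lean on, the titles and years are not guaranteed to be accurate.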

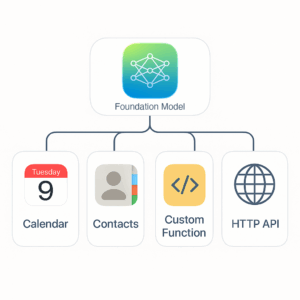

Strength #2: The Real Superpower… Tool Calling

As cool as structured output is, in my opinion the true value of the Foundation Models framework is that the model can invoke local tools. Think of these as Swift functions the model can ask to run in response to a natural language request. You define the tools (types conforming to the framework’s Tool protocol) and expose them to the model. They can give the model capabilities such as checking local storage, calling an API, querying a database, or even triggering custom app logic.

The model processes the natural language request, decides which tool to call, and gets the tool’s result back for further processing or for formatting into structured output. Of course, all of this still has to fit within that tight 4,096-token limit, and tool definitions and tool output count against it too, so you have to be strategic.
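Here’s a minimal sketch of a tool, assuming the release iOS 26 SDK, where `call(arguments:)` can return a plain String (early betas used a `ToolOutput` type instead). `LocalLibrary`, `LibraryLookupTool`, and `whereIsMyBook()` are hypothetical. The dictionary stands in for whatever local storage, API, or database your app actually talks to.

```swift
import FoundationModels

// Hypothetical local data standing in for local storage or a database.
enum LocalLibrary {
    static let shelf: [String: String] = [
        "dune": "Dune by Frank Herbert (1965), on shelf B2",
        "neuromancer": "Neuromancer by William Gibson (1984), lent to Sam",
    ]

    /// Plain lookup logic, kept separate from the tool so it's easy to test.
    static func find(titled title: String) -> String {
        shelf[title.lowercased()] ?? "No book titled \"\(title)\" in the local library."
    }
}

/// A hypothetical tool the model can call when a request needs library data.
struct LibraryLookupTool: Tool {
    let name = "lookUpLocalBook"
    let description = "Looks up a book by title in the user's local library."

    @Generable
    struct Arguments {
        @Guide(description: "The title of the book to look up")
        var title: String
    }

    // The framework feeds this return value back to the model as the tool result.
    // Assumption: release SDK signature; early betas returned `ToolOutput`.
    func call(arguments: Arguments) async throws -> String {
        LocalLibrary.find(titled: arguments.title)
    }
}

func whereIsMyBook() async throws -> String {
    let session = LanguageModelSession(
        tools: [LibraryLookupTool()],
        instructions: "Answer questions about the user's books. Use the lookup tool for any specific title."
    )
    let response = try await session.respond(to: "Where's my copy of Dune?")
    return response.content
}
```

Keeping the real work in a plain function like `LocalLibrary.find(titled:)` means you can unit test it without the model. The tool itself stays a thin adapter.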

But think about the possibilities. The model itself doesn’t need to be all-powerful if it can intelligently orchestrate the powerful tools you give it.

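The API also lets you stream a response as it’s generated, which is the standard way to drive a real-time chat UI. Combined with the @Generable macro, though, you can stream your struct itself: each update is a partially generated version of your type, with properties that stay optional until the model produces them.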

This creates a really cool visual effect where your UI can populate incrementally as the model produces the content. You can have placeholders that fill in as the data becomes available, creating a dynamic and responsive user experience that goes way beyond a simple chat window.
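Here’s a minimal streaming sketch, assuming the release iOS 26 SDK, where each element of the stream is a snapshot whose `content` is the partially generated version of your type (early betas yielded the partial value directly). `TripIdea` and `streamTripIdea()` are hypothetical, and `print` stands in for updating SwiftUI state.

```swift
import FoundationModels

// Hypothetical type for illustration.
@Generable
struct TripIdea {
    @Guide(description: "A short, catchy title for the trip")
    var title: String

    @Guide(description: "Three activities for the trip", .count(3))
    var activities: [String]
}

func streamTripIdea() async throws {
    let session = LanguageModelSession()
    let stream = session.streamResponse(
        to: "Plan a rainy-day trip in Seattle.",
        generating: TripIdea.self
    )
    // Each snapshot holds a TripIdea.PartiallyGenerated: every property is
    // optional and fills in as the model produces it, so a UI can show
    // placeholders until the values arrive.
    for try await snapshot in stream {
        let partial = snapshot.content
        print(partial.title ?? "(pending)", partial.activities ?? [])
    }
}
```

In a real app you’d assign `snapshot.content` to an observable property and let SwiftUI render the placeholders and filled-in fields.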

Let’s See Some Code

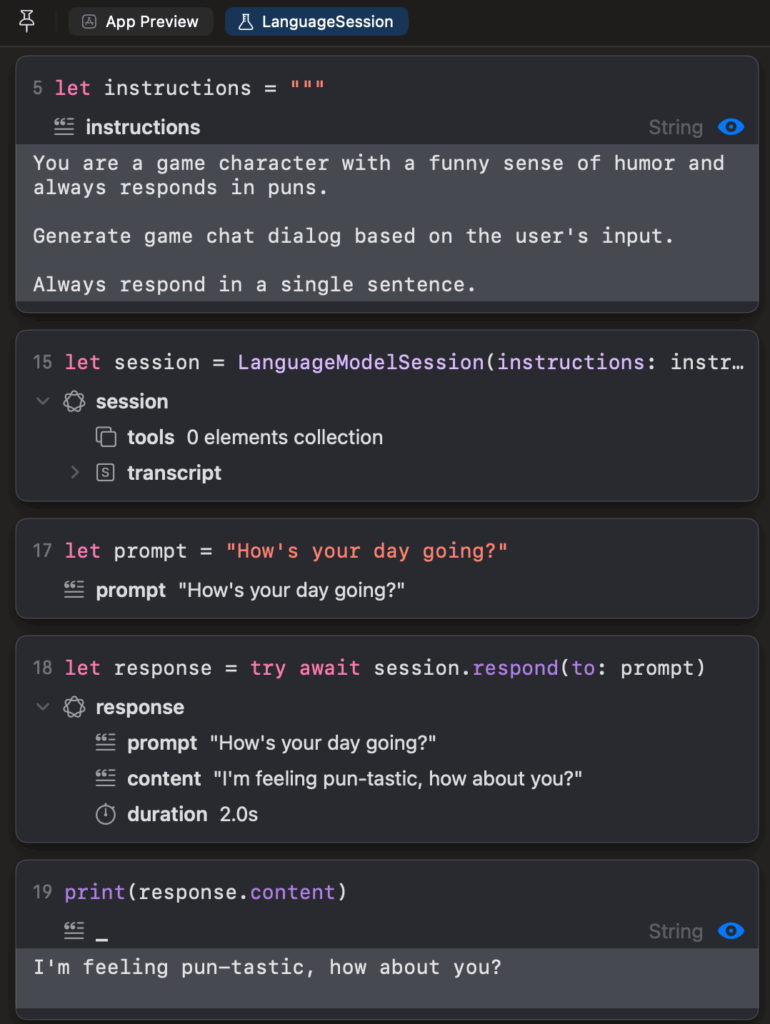

The API itself is surprisingly easy to use. Here’s a quick Playground snippet showing how you might kick off a session to generate a line of game chat dialogue. It’s clean, simple, and gets the job done.

```swift
import FoundationModels
import Playgrounds

#Playground {
    let instructions = """
        You are a game character with a funny sense of humor and always responds in puns.
        Generate game chat dialog based on the user's input.
        Always respond in a single sentence.
        """

    let session = LanguageModelSession(instructions: instructions)

    let prompt = "How's your day going?"
    let response = try await session.respond(to: prompt)
    print(response.content)
}

// Output:
// "I'm feeling pun-tastic, how about you?"
```

Using #Playground gives you a canvas where you can inspect the input, tool usage, response duration, and response output.

It’s the same convenience Apple gave us with SwiftUI Previews, now applied to model sessions.

So… Should You Use It?

Absolutely! But how you use it depends on your use case. Don’t think of this as “ChatGPT in your pocket.” Instead, think of the Foundation Models framework as a highly efficient formatter and extractor: a lightweight engine capable of translating simple natural language requests into the precise data structures and function calls your app already knows how to handle.
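As a concrete, hypothetical example of that extractor role, here’s a sketch that turns a casual request into a typed task your app could schedule. It assumes the iOS 26 SDK. `Priority`, `TaskRequest`, and `extractTask(from:)` are illustrative names, not anything from Apple’s samples.

```swift
import FoundationModels

// Hypothetical types: the kind of data structures an app already knows how to handle.
@Generable
enum Priority {
    case low, medium, high
}

@Generable
struct TaskRequest {
    @Guide(description: "A short title for the task")
    var title: String

    @Guide(description: "How urgent the task sounds")
    var priority: Priority

    @Guide(description: "Estimated minutes needed, if mentioned; otherwise 30", .range(5...240))
    var minutes: Int
}

func extractTask(from text: String) async throws -> TaskRequest {
    let session = LanguageModelSession(
        instructions: "Turn the user's request into a task. Do not add details that are not in the request."
    )
    return try await session.respond(to: text, generating: TaskRequest.self).content
}

// Your existing app logic takes over from here, e.g.:
// let task = try await extractTask(from: "Quick 15 minute review of the Q3 deck, it's urgent")
// scheduleTask(task)  // hypothetical app function
```

The model only does the fuzzy part, mapping loose phrasing onto a fixed shape. Everything after that is ordinary, testable Swift.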

It’s not going to write essays or answer deep technical questions. It doesn’t have broad world knowledge or heavy reasoning power. But it’s fast, secure, and free. And for a lot of apps, that’s enough.

Final Thoughts

I’ve been having a lot of fun pushing the limits of this new framework on the beta. It’s not the all-knowing AI assistant some might have hoped for, but it’s a powerful, private, and surprisingly capable tool for very specific tasks. Its strengths lie not in its own knowledge, but in its ability to format data and orchestrate the tools you provide.

In my next blog post, I’ll share a demo of that daily focus app I mentioned, showing how to hook the Foundation Models framework up to a real tool to build a practical feature.

* * *

For a much deeper dive, I highly recommend checking out Apple’s official machine learning website. The research paper gives incredible detail on the model architecture and training.

Updates to Apple’s On-Device Foundation Models

* * *

Thanks for reading, and see you in the next one!